Learn to Binarize CLIP for Multimodal Retrieval and Ranking

Binary embedding is a powerful technique that allows us to convert high-dimensional data into binary vectors. This process allows for efficient storage and faster computation, especially for large-scale multimedia retrieval tasks. Compressing float32 to binary can reduce memory usage by 32 times. This blog explores the integration of binary embedding within the CLIP framework, aiming to optimize multimodal retrieval and ranking performance. We delve into different binary quantization functions, training methodologies, and how they impact the performance.

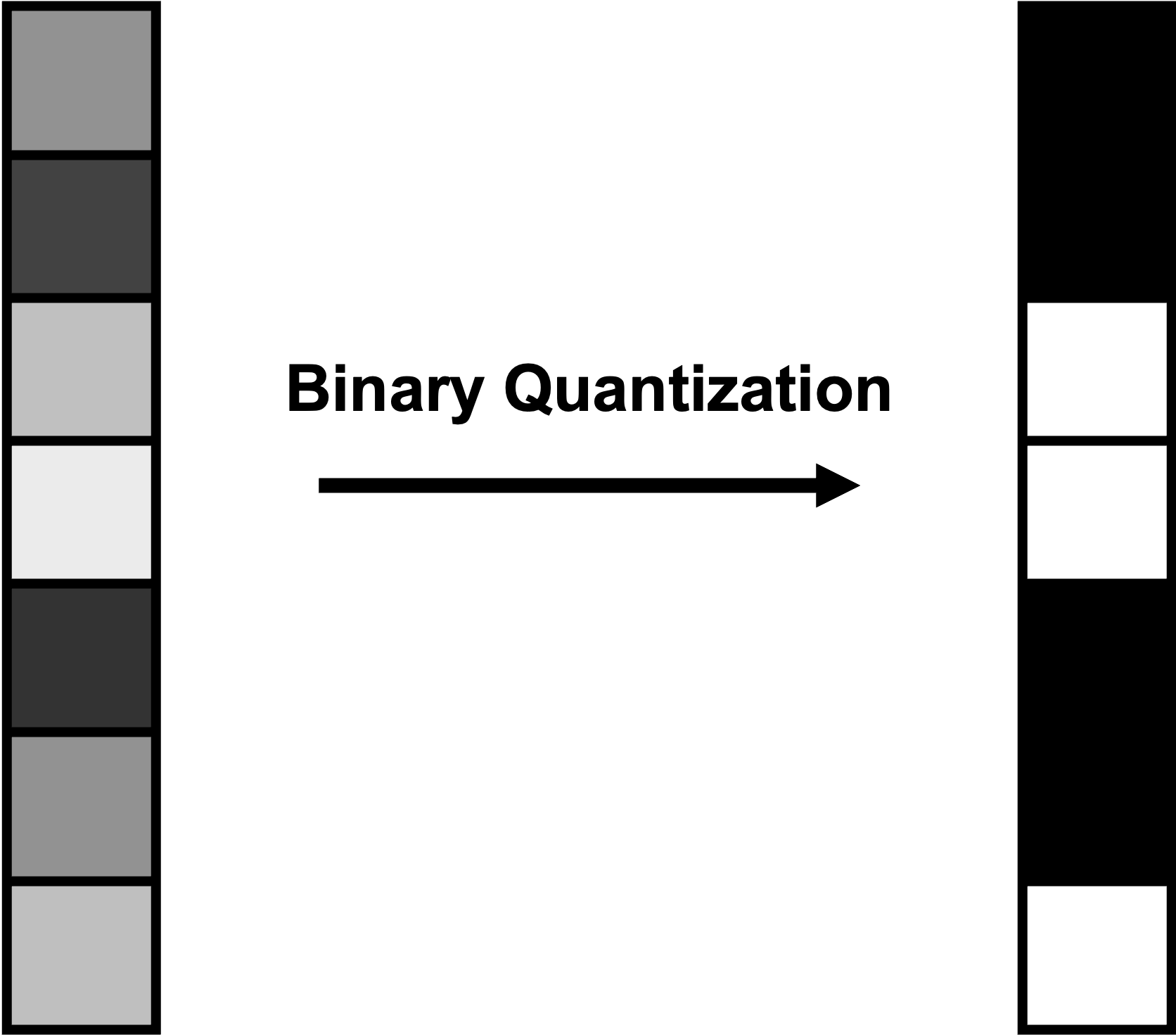

Test-time Binary Quantization

Binary quantization commonly involves converting float embeddings during the inference stage. This is achieved by simply applying the unit step function to each dimension:

This process results in embeddings with the same dimension in bits. For instance, quantizing 512-dimension float32 embeddings would yield 512-dimension binary embeddings in bits. Python libraries such as Sentence-Transformers further compress these embeddings into bytes using numpy.packbits. This technique reduces the embedding dimension by 8 times using uint8. Consequently, a 512-dimension embedding becomes a 64-dimension embedding with an uint8 data type.

We applied the test-time binary quantization to embeddings of our CLIP-based model GCL fine-tuned on a subset of GS-Marqo-10M. For more information on GCL and the dataset, refer to our blog. We measured the multimodal retrieval and ranking performance using normalized Discounted Cumulative Gain (nDCG).

The results indicate that binary quantization at test-time significantly impairs CLIP's retrieval and ranking performance, and packing bits is not effective at all. We utilized cosine similarity to evaluate relevance, and applying Hamming distance to binary embeddings yields similar outcomes (less than 0.003 nDCG difference). This degradation is expected and inevitable since the binary embedding has 32 times smaller granularity. However, the result is not optimal because the pre-trained GCL has not learned to effectively differentiate between positive and negative pairs of samples with 32 times less information.

Learn to Binarize CLIP

To enhance performance, we integrated binary quantization into the training process. Since the standard binary quantization is a non-differentiable operation, we used pseudo-quantization to approximate the binary quantization, employing continuous functions in place of the unit step function. We considered two functions tanh and sigmoid:

We applied a large scale to the input before the functions (i.e., \( \text{tanh}(\alpha x) \) and \( \text{sigmoid}(\alpha x) \) to better approximate the standard quantization. During training, we calculated the original GCL loss and added the same loss computed from pseudo-quantized embeddings, which are the embeddings passed to either activation function (tanh or sigmoid). The final loss is calculated by adding these two losses. This process enables the model to learn to use both float and binary embeddings. During the inference, we use the sign function if the tanh function was used during training, and the step function if the sigmoid function was used during training.

Tanh vs. Sigmoid

Since tanh and sigmoid have different rates of changes at \(x=0\), we applied 4 times larger scale to sigmoid because the derivative of sigmoid at \(x=0\) is 0.25 and the derivative of tanh at \(x=0\) is 1 (i.e., we compare \(\text{sigmoid}(4\alpha x)\) and \(\text{tanh}(\alpha x)\)). This equalizes the rate of change at \(x=0\) for a fair comparison.

We have observed that the model using the sigmoid function outperforms the one using the tanh function across all splits. This could be because binary embeddings of D-dimension with -1 and 1 exhibit a maximum of \(D\) levels of difference when utilizing cosine similarity. Meanwhile, binary embeddings with 0 and 1 display a maximum of \(D \times N\) difference, where \(N\) represents the number of non-zero elements in the binary embeddings. A simple illustration of this concept is provided below:

When using binary of -1 and 1, the binary vector at the top (i.e., [1, 1, -1]) has the same cosine similarity for both bottom binary vectors (i.e., [1, -1, -1] and [1, 1, 1]). When using 0 and 1 instead, the cosine similarity is different across two pairs.

We provide a performance comparison of binary quantization when GCL is trained using sigmoid and without training (test-time).

When GCL is trained using the pseudo-quantization loss with sigmoid activation, the performance in the following areas is maintained at corresponding percentages of float32 embeddings: 87% for in-domain, 88% for novel query, 93% for novel document, and 88% for zero-shot.

Impact on Float Embeddings

We aim to use both float and binary quantized embeddings during inference by adding a pseudo-quantization loss to the original training loss. This allows for precision flexibility with the same model. However, this change in training loss could also affect the performance of the float embeddings. As a result, we compare the performance of float32 embeddings with and without the pseudo-quantization loss.

Interestingly, the performance for in-domain and novel queries slightly decreases, while an improvement is observed in the novel document and zero-shot performance. A more comprehensive analysis of the performance difference is required to understand the gap, yet we can conclude that the performance with float embedding is well preserved, averaging 99.7% across splits.

Hamming Distance vs. Cosine Similarity

The original CLIP measures the cosine similarity between text embeddings and image embeddings. When these embeddings are pseudo-quantized using sigmoid activation, we can apply the negative Hamming distance instead of cosine similarity by measuring the number of differing bits. The negative sign is necessary because a higher Hamming distance indicates lower similarity.

During the inference stage, this can be easily done. However, during training, we need to approximate the Hamming distance as it is a non-differentiable measure. We approximate it with negative \(l_1\)-norm of the difference between two vectors, expressed as \(-\sum_{i} \vert v_1[i]-v_2[i]\vert\), where \(v_1\) and \(v_2\) are pseudo-quantized binary embeddings.

The result suggests that the Hamming distance is marginally better than cosine similarity. However, the Hamming distance only works with binary vectors. This means that when you need to average two binary vectors and use the Hamming distance, it cannot be directly applicable.

Binary Quantization Scale

The pseudo-quantization process, using both sigmoid and tanh functions, requires the quantization scale \(\alpha\) to approximate the discontinuous binary quantization. We here analyze the impact of the scale on performance for both tanh and sigmoid functions relative to the float32 embedding performance.

The results show that the overall performance is affected by the quantization scale. Trade-offs between different splits are observed for both activation functions when the quantization scale is adjusted. It enhances performance on some splits but impairs it on others. The optimal strategy would involve prioritizing a significant performance metric and experimenting with various scales to identify the most effective one.

Discussion

One limitation of this blog is that we only considered a first-stage retrieval system. The performance can be further enhanced by retrieving candidates using binary embeddings and subsequently reranking them with float embeddings. Furthermore, even though we employed the pseudo-quantization loss in training GCL, a performance gap still remains. This gap is unavoidable due to the loss of precision. Therefore, anticipating a performance level equivalent to using float embeddings would be unrealistic without introducing additional changes in models.

Conclusion

We showed that test-time binary quantization can significantly impair the performance of GCL, and incorporating binary quantization into the training phase can notably improve outcomes. The use of sigmoid activation consistently outperforms tanh activation across all splits, which can maintain 87-93% of the float embeddings' performance. Furthermore, the performance of float embeddings is well preserved even when the pseudo-quantization loss is added during training, averaging at 99.7% of float embeddings’ performance across all splits. While the use of Hamming distance slightly outperforms cosine similarity for binary embeddings, its use is somewhat limited. Lastly, it's crucial to remember that both tanh and sigmoid are sensitive to the quantization scale across all splits. Therefore, careful experimentation is required to determine the best performing scale.

%20(1).png)

.png)

.png)

.png)