How to Build High-Performance E-Commerce Site Search at Enterprise Scale


Marqo is collaborating with BluelightAI to create an industry-leading ecommerce solution that improves embedding model fine-tuning by automating the analysis of how queries perform against the products on your website.

Marqtune, available on Marqo Cloud, is an embedding model training platform that improves product search and recommendations by tailoring models to your data. BluelightAI illuminates patterns in how your queries are performing and shows where to make targeted improvements. By combining these tools, ecommerce businesses can confidently fine-tune models to deliver more precise search results, improving customer experience and driving revenue.

The motivation behind this approach is to help ecommerce teams maximize the impact of their search optimization efforts by focusing on high-value product categories rather than isolated, low-performing queries. Instead of spending time troubleshooting individual search issues, which can be narrow in scope and limited in effect, Marqtune and BluelightAI Cobalt allow teams to identify and target entire product categories that drive business results. This is useful because it provides a strategic way to improve search relevance where it matters most, directly linking search performance to revenue.

With Marqtune and BluelightAI, we’re able to estimate the potential revenue impact for each query within a category, giving us insights into the financial value of targeted improvements. For example, enhancing relevance in a category like "Women’s comfortable shoes" could be associated with an estimated revenue increase. By analyzing these high-impact categories, we can also identify actionable steps for improvement—such as expanding dataset coverage—to ensure the model captures all relevant data for even better results. This strategic focus enables teams to prioritize optimizations that not only boost search accuracy but also directly contribute to business growth.

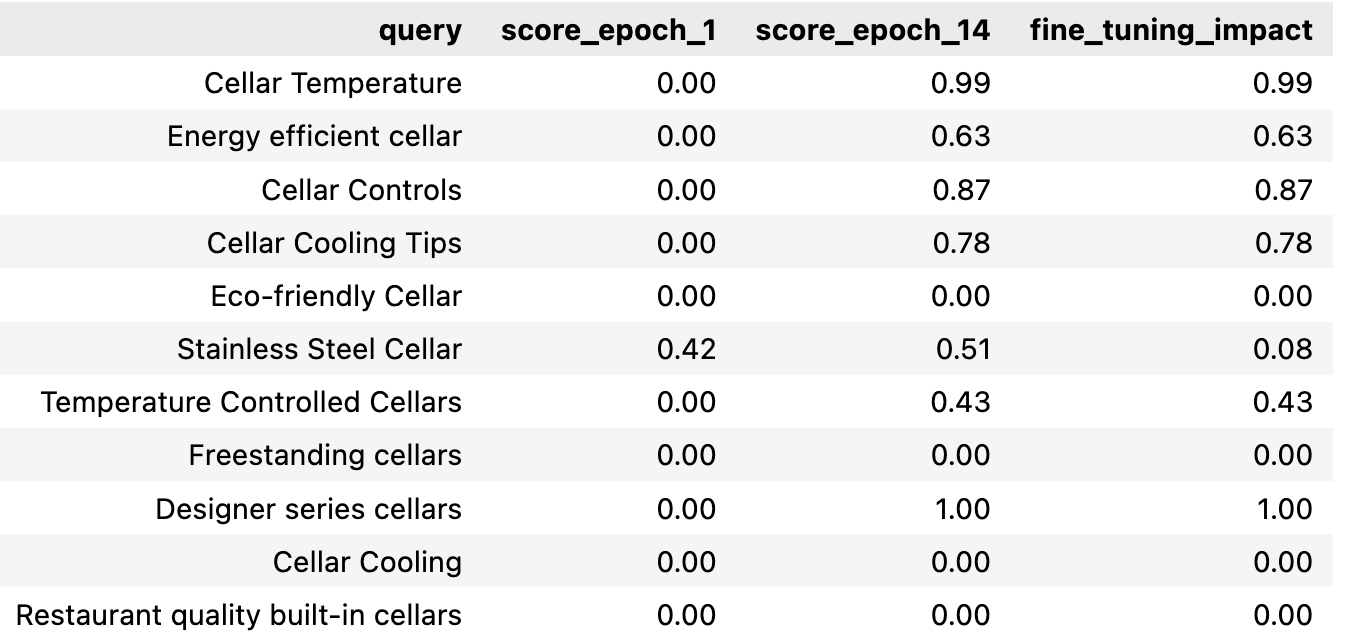

In this blog, we’ll show you how to obtain information like that in Figure 1: the impact of fine-tuning on each query, as measured by an industry-standard performance metric such as NDCG (or your business or technical metric of choice). This graph is valuable because it shows where fine-tuning improved performance and where further adjustments might be needed to close gaps.

On top of this, we’ll demonstrate how to generate group labels for your queries so that you don’t have to analyze one query at a time. Why is this important? Single-query analysis is too slow to drive meaningful business outcomes, and it risks missing statistically significant trends hidden in your dataset, especially when you have millions of data points to consider.

In this example, we fine-tuned the e5-base-v2 model on a 100k subset of Marqo-GS-10M, our dataset of 10 million products from Google Shopping. The model was trained for 14 epochs.

We then use BluelightAI Cobalt to produce the table below, which shows the query groups that improved most from fine-tuning with Marqtune. Here, score_epoch_1 is the base model, e5-base-v2, and score_epoch_14 is the model fine-tuned with Marqtune. To do this yourself, all you need is a performance score of your choice for each of your ecommerce search queries. Cobalt groups queries by theme, so ecommerce teams don’t have to speculate about which search trends are in their data. Cobalt uses TDA-based (topological data analysis) clustering to obtain the group labels.

For many customers the most important query categories are seasonal, so it’s crucial for them to be able to see that fine-tuning the base model improves their core focus areas. In this example, wine cellars are very popular in the summer and are expensive products, so we expect a high return on investment (ROI) from using Marqtune.

Understanding what’s improving in your model is key to gauging the ROI of each training run and your chosen base model. Tracking these improvements helps you see if the effort is paying off—especially in groups that matter most for your specific goals. If the ROI isn’t meeting your expectations in critical areas, it might be time to try fine-tuning a different base model or adjusting your hyperparameters. Making these changes can help align your model’s performance with the results you’re looking to achieve.

The first step is to fine-tune your embedding model with Marqtune, available in Marqo Cloud. For this blog, we fine-tuned a text model, e5-base-v2, which you can do for yourself using this Google Colab notebook.

You can expect to see logs like the following:

We can obtain information about the epoch, data, batch, logit scale, logit bias, text-image loss, text-text loss, weighted mean loss and loss.

You can also perform an evaluation on the base model vs fine-tuned model and expect to get results such as:

If you’re interested in fine-tuning a multimodal model, we also have this Google Colab notebook you can run.

For every model we want to compare against our newly fine-tuned model, we collect per-query performance on the same set of queries. If you already have your own score per query, such as purchase rate or click-through rate, you can skip ahead to Loading Your Dataframe. You can then easily compare Marqtune, Marqo Cloud, proprietary, and/or open source models.

In this example, we compare the Hugging Face base model, e5-base-v2, with its fine-tuned checkpoint at epoch 14 from Marqtune. We chose 14 epochs for this round based on insights from a previous 25-epoch training run: the Marqtune logs showed that the training loss was optimal at 14 epochs, making it the clear choice for maximizing performance.

First, we use Marqo to retrieve results for our set of queries and save the raw results to a file. For speed, the notebook sends queries to the vector database in parallel. To do this yourself, run this Google Colab notebook.
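The parallel retrieval step can be sketched with the standard library’s ThreadPoolExecutor. This is a minimal sketch, not the notebook’s code: search_one is a hypothetical placeholder that you would replace with a real search against your Marqo index (for example through the Marqo Python client), and the JSON layout of the saved file is an assumption.

```python
from concurrent.futures import ThreadPoolExecutor
import json


def search_one(query):
    """Hypothetical stand-in for a single vector-database search.

    Replace the body with a real call to your index. Here it returns a
    fake ranked list of product IDs so the sketch runs on its own.
    """
    return [f"{query}-product-{rank}" for rank in range(3)]


def retrieve_all(queries, search_fn=search_one, max_workers=8):
    """Run search_fn over all queries in parallel, keyed by query string."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map preserves input order, so results line up with queries
        results = list(pool.map(search_fn, queries))
    return dict(zip(queries, results))


def save_results(results, path):
    """Persist raw retrieval results so scoring can run as a separate step."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump(results, f)
```

For example, `save_results(retrieve_all(my_queries), "raw_results.json")` writes one ranked list per query (my_queries being your own list of query strings). Threads suit this step because each search spends most of its time waiting on the network rather than on the CPU.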

Next, we take the retrieval results saved by the notebook above and measure how well Marqo’s search results match what we expected. The notebook checks each result’s relevance, scores how well it fits, and saves these scores so we can see whether Marqo is finding the right matches. From these scores we calculate search retrieval metrics such as NDCG and MRR. To do this yourself, run this Google Colab notebook.
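For reference, here is a minimal per-query NDCG@k in plain Python, assuming you have graded relevance judgments for each query as a dict of product ID to relevance. It uses the common rel_i / log2(i + 1) discount and builds the ideal ranking from the judgments; the evaluation notebook may use a different gain formula or cutoff.

```python
import math


def dcg(relevances):
    """Discounted cumulative gain: rel_i / log2(i + 1) for 1-based rank i."""
    return sum(rel / math.log2(rank + 2) for rank, rel in enumerate(relevances))


def ndcg_at_k(retrieved_ids, judgments, k=10):
    """NDCG@k for one query.

    retrieved_ids: ranked product IDs returned by the search engine.
    judgments: dict mapping product ID -> graded relevance (0 = not relevant).
    """
    gains = [judgments.get(pid, 0.0) for pid in retrieved_ids[:k]]
    ideal = sorted(judgments.values(), reverse=True)[:k]
    ideal_dcg = dcg(ideal)
    # A query with no relevant products has no meaningful NDCG; score it 0.0
    return dcg(gains) / ideal_dcg if ideal_dcg > 0 else 0.0


def score_all(raw_results, judgments_by_query, k=10):
    """Per-query NDCG@k, keyed by query string."""
    return {
        q: ndcg_at_k(ids, judgments_by_query.get(q, {}), k)
        for q, ids in raw_results.items()
    }
```

A score of 1.0 means the relevant products were returned in the ideal order; a relevant product at rank 2 instead of rank 1 is discounted by 1 / log2(3), roughly 0.63.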

After running both notebooks for each of our models, we have two Pandas dataframes, epoch_1_ndcg_per_query_df and epoch_14_ndcg_per_query_df, which can be used with BluelightAI to evaluate performance. You can also create your own dataframe with a single query column and a score column for each of your models.

Let’s now compare our fine-tuned Marqtune model with the base model for the same queries.

Comparing the base model at epoch 1 with the fine-tuned model at epoch 14 shows the impact of the fine-tuning process and lets us measure the performance gains. Note that these models can be Marqo Cloud models, models fine-tuned with Marqtune, your own model, or any other open source model of interest.
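The comparison itself is a join on the query string followed by a subtraction. The sketch below assumes each model’s scores are available as a plain dict of query to score (for example, built from the per-query dataframes above); in pandas, an inner merge on the query column performs the same join. The output keys mirror the column names used later in this post.

```python
def compare_models(base_scores, tuned_scores):
    """Join two per-query score maps and compute fine_tuning_impact per query.

    Only queries scored by both models are kept, so the comparison is
    always over the same query set.
    """
    shared = sorted(base_scores.keys() & tuned_scores.keys())
    rows = []
    for q in shared:
        rows.append({
            "query": q,
            "score_epoch_1": base_scores[q],
            "score_epoch_14": tuned_scores[q],
            # Positive = the fine-tuned model improved on this query
            "fine_tuning_impact": tuned_scores[q] - base_scores[q],
        })
    # Largest improvements first
    rows.sort(key=lambda r: r["fine_tuning_impact"], reverse=True)
    return rows
```

Dropping queries that only one model scored avoids silently comparing different query sets, which would bias the measured gain.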

Instead of doing a per-query analysis, let’s take a look at the BluelightAI Cobalt group labels which are more scalable.

Cobalt from BluelightAI generates group labels for your queries using advanced natural language clustering. This allows us to identify groups of similar queries for targeted fine-tuning. Cobalt can be installed with pip; for the latest setup instructions, see the BluelightAI documentation.

This enables us to observe which groups benefited most from fine-tuning and which may require more effort with Marqtune. For more information on the code used here, visit the Cobalt documentation.
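Once every query has a group label, the group-level view is an aggregation. Cobalt computes its own groups and statistics; the sketch below only illustrates the idea, assuming a hypothetical labels dict that maps each query to a single group label (one clustering level) and per-query rows holding score_epoch_1 and score_epoch_14. It averages scores per group and sorts by improvement.

```python
from collections import defaultdict
from statistics import mean


def summarize_groups(rows, labels):
    """Aggregate per-query rows into per-group statistics.

    rows: dicts with "query", "score_epoch_1", "score_epoch_14".
    labels: hypothetical mapping query -> group label; queries without
    a label are skipped.
    """
    by_group = defaultdict(list)
    for row in rows:
        label = labels.get(row["query"])
        if label is not None:
            by_group[label].append(row)

    summary = []
    for label, members in by_group.items():
        before = mean(r["score_epoch_1"] for r in members)
        after = mean(r["score_epoch_14"] for r in members)
        summary.append({
            "label": label,
            "query_count": len(members),
            "score_epoch_1": before,
            "score_epoch_14": after,
            "fine_tuning_impact": after - before,
        })
    # Groups that improved most come first
    summary.sort(key=lambda g: g["fine_tuning_impact"], reverse=True)
    return summary
```

Averaging within a group smooths out noisy single-query scores, which is why group-level trends are easier to act on than individual queries.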

Let’s first take a look at those groups that performed well after fine-tuning.

You have full control over which results to focus on. The groups are returned in a Pandas dataframe, so you can easily sort and filter them, for example by query_count or by score.

This produces the following table. Here we highlight the groups that improved most from fine-tuning with Marqtune.

Let’s walk through each of the columns in this table.

- label: The group label that BluelightAI Cobalt assigned to the queries.
- query_count: The number of individual queries in each group at the specified level. A higher query count means the group includes more queries that share similar themes or characteristics.
- level: The depth of clustering used to group similar queries. Lower levels represent more detailed clusters; higher levels represent broader groups.
- score_epoch_1: The model’s score (NDCG) before fine-tuning, at epoch 1. A score of 0.0 indicates that the model initially failed to retrieve relevant results for these queries.
- score_epoch_14: The model’s score (NDCG) after fine-tuning, at epoch 14. A score of 1.0 indicates that the model retrieved perfect or highly relevant results for these queries.
- fine_tuning_impact: The change in performance between epoch 1 and epoch 14, calculated as score_epoch_14 minus score_epoch_1. A value of 1.0 means fine-tuning moved these queries from non-relevant results to highly relevant ones (NDCG).

For many customers, seasonal queries are a top priority, so it is essential to see how fine-tuning a base model with Marqtune drives improvements in core focus areas. For instance, summer queries for high-value products like wine cellars, with terms such as "cellars, cellar, cellar temperatures", saw a substantial +0.43 improvement after fine-tuning, demonstrating Marqtune’s fine-tuning power and its potential for high ROI. Tracking these gains is key to understanding whether fine-tuning is delivering results where they matter most.

Let’s take a look at this label:

This returns:

We can look at the specific queries inside this label and observe which queries had the biggest fine-tuning impact.

This returns the table:

The queries that perform strongly after fine-tuning provide valuable input for your product strategy. For instance, high-performing queries related to cellar products show that the fine-tuned model now retrieves these items well. You could therefore consider prioritizing or promoting these products, as the fine-tuned model’s improved search makes them more likely to generate interest and engagement.
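Drilling into a single label, as done for the cellar group, amounts to filtering the per-query rows to that label and ranking them by fine-tuning impact. A minimal sketch, again assuming a hypothetical labels dict from query to group label and per-query rows holding score_epoch_1 and score_epoch_14:

```python
def queries_in_group(rows, labels, label, top_n=None):
    """Return the rows for one group label, ranked by fine_tuning_impact.

    rows: dicts with "query", "score_epoch_1", "score_epoch_14".
    labels: hypothetical mapping query -> group label.
    The input rows are not modified; copies with an added
    "fine_tuning_impact" key are returned.
    """
    members = [
        {**r, "fine_tuning_impact": r["score_epoch_14"] - r["score_epoch_1"]}
        for r in rows
        if labels.get(r["query"]) == label
    ]
    # Biggest gains first
    members.sort(key=lambda r: r["fine_tuning_impact"], reverse=True)
    return members if top_n is None else members[:top_n]
```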

Conversely, we can also find groups that didn’t work with the base model and/or didn’t change much with fine-tuning. This quickly shows which kinds of queries have the most room to improve.

This returns the table:

Monitoring these improvements helps evaluate the ROI of each training cycle and assess if the chosen base model is the right fit. For areas that show minimal improvement across all 14 epochs, a more advanced base model could be a worthwhile investment to explore within Marqtune. If important revenue-driving products like summer pants and kitchen spice items aren’t seeing the desired gains, opting for a higher-performing base model—and fine-tuning it in Marqtune—might offer the level of improvement needed. This approach ensures that fine-tuning continues to drive value, particularly in high-impact categories, and aligns model performance with your strategic goals.
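One way to surface groups with room to improve, sketched here under assumptions: flag groups whose fine-tuned score is still low and whose fine-tuning impact is small, then list the largest groups first. The thresholds are illustrative placeholders, not recommended values, and the input is assumed to be per-group dicts with the same columns as the table described earlier.

```python
def groups_needing_work(summary, max_tuned_score=0.3, max_impact=0.05):
    """Flag groups that still score low after fine-tuning and barely moved.

    summary: per-group dicts with "label", "query_count", "score_epoch_1",
    "score_epoch_14", "fine_tuning_impact". Threshold defaults are
    illustrative only.
    """
    flagged = [
        g for g in summary
        if g["score_epoch_14"] <= max_tuned_score
        and g["fine_tuning_impact"] <= max_impact
    ]
    # Largest groups first; ties broken by lowest fine-tuned score
    flagged.sort(key=lambda g: (-g["query_count"], g["score_epoch_14"]))
    return flagged
```

Sorting by group size first puts the categories that affect the most searches at the top, which is where a different base model or more training data is most likely to pay off.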

While this example demonstrates a simple fine-tuning process using Marqtune, not all queries will show immediate improvement. With continued fine-tuning, you can refine your model and achieve even greater performance gains. Here are a few ways to push your model further:

By making these adjustments and leveraging Marqtune and BluelightAI Cobalt, you can continuously improve and validate your model's performance. Whether you are optimizing for specific queries or boosting overall accuracy, Marqtune offers the flexibility and precision needed to fine-tune confidently. Keep iterating and watch your model grow stronger over time!

By fine-tuning with Marqtune and validating with BluelightAI, you’re taking a proactive approach to improving and validating your models. Whether you’re optimizing for ecommerce search, personalized recommendations, or other tasks, the combination of Marqtune (and Marqo Cloud) and BluelightAI gives you the power to create state-of-the-art embedding models tailored to your specific needs.