
Meta AI just released Llama 3.1, one of the most capable openly available LLMs to date, with claims that it outperforms GPT-4o and Anthropic’s Claude 3.5 Sonnet on various benchmarks. The models come as a collection of 8B, 70B, and 405B parameter sizes, with 8B best suited to limited computational resources (like local deployment) and 405B setting new standards for AI.

The three models can be summarised as follows:

As we are building a local deployment, we will be using Llama 3.1 8B. As explained, this model excels at text summarisation, classification and language translation... but what about question answering?

I created a simple, local RAG demo with Llama 3.1 8B and Marqo. The project lets you run a Knowledge Question and Answering System locally and is based on the original Marqo & Llama repo by Owen Elliot. The code for this article can be found on GitHub here.

To begin building a local RAG Q&A, we need both the frontend and backend components. This section provides information about the overall project structure and the key features included. If you're eager to start building, jump to the Setup and Installation section.

The frontend needs the following sections:

Tying this all together:

Great! Now that the frontend is established, the next (and most important) part is building the RAG component.

In this demo, I used 8B-parameter Llama 3.1 GGUF models to allow for smooth local deployment. If you have 16GB of RAM, I highly recommend starting with the 8B-parameter models. This project has been set up to work with other GGUF models too, so feel free to experiment!

You can obtain Llama 3.1 GGUF models from lmstudio-community/Meta-Llama-3.1-8B-Instruct-GGUF on the Hugging Face Hub. There are several models you can download from there, but I recommend starting with Meta-Llama-3.1-8B-Instruct-Q4_K_M.gguf. The demo video in the GitHub repository uses Q2_K.
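Why is 16GB of RAM enough for the 8B models? A back-of-the-envelope estimate helps: a model's weights take roughly (parameter count × bits per weight ÷ 8) bytes. The sketch below is only an approximation. The function name is hypothetical, it ignores the KV cache and runtime overhead, and the bits-per-weight values are ballpark assumptions rather than exact figures for the Q2_K or Q4_K_M files.

```python
def estimate_weights_gb(n_params: float, bits_per_weight: float) -> float:
    """Approximate size of a model's weights in gigabytes (1 GB = 1e9 bytes).

    Ignores file metadata, the KV cache and runtime overhead, so real memory
    use while running the model will be higher than this figure.
    """
    return n_params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    n_params = 8e9  # Llama 3.1 8B, rounded
    # Ballpark bits-per-weight values (assumptions, not official figures).
    for label, bpw in [("~3-bit quant", 3.0), ("~5-bit quant", 5.0), ("FP16", 16.0)]:
        print(f"{label}: ~{estimate_weights_gb(n_params, bpw):.1f} GB")
```

Under these assumptions, a 4- to 5-bit quantization of an 8B model needs roughly 5GB for its weights, versus about 16GB at FP16. That is why the quantized 8B files fit comfortably on a 16GB machine, while an unquantized copy would not.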

Be aware that the llama.cpp library currently needs some adjustments to work with Llama 3.1 (see this issue). We do not need to do anything on our side, but please be cautious, as this may result in some interesting responses!

A big part of improving Llama 3.1 8B’s answers is adding relevant knowledge to its prompts. This is known as Retrieval Augmented Generation (RAG). To do this, we need to store that knowledge somewhere. That’s where Marqo comes in!
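To make the idea concrete, here is a minimal sketch of the "augmentation" step, in which retrieved snippets are pasted into the prompt ahead of the user's question. The function name and template wording are hypothetical and are not taken from the project's repository. The sketch only illustrates the general pattern.

```python
from typing import Sequence


def build_rag_prompt(question: str, context: Sequence[str]) -> str:
    """Combine retrieved knowledge snippets and a user question into one prompt.

    The template wording is a hypothetical example, not the repo's prompt.
    """
    if not context:
        # No relevant knowledge was retrieved: fall back to the bare question.
        return f"Question: {question}\nAnswer:"
    # Number each snippet so the model can tell them apart.
    context_block = "\n".join(
        f"[{i}] {snippet}" for i, snippet in enumerate(context, start=1)
    )
    return (
        "Use the following context to answer the question. "
        "If the context is not relevant, answer from your own knowledge.\n\n"
        f"Context:\n{context_block}\n\n"
        f"Question: {question}\nAnswer:"
    )


if __name__ == "__main__":
    print(build_rag_prompt("What is your name?", ["your name is marqo"]))
```

The empty-context branch matters in practice. Until the knowledge store is populated, retrieval returns nothing, and the model should simply receive the plain question.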

Marqo is more than just a vector database; it's an end-to-end vector search engine for both text and images. Vector generation, storage and retrieval are handled out of the box through a single API, so there's no need to bring your own embeddings! This full-fledged vector search solution makes storing documents easy, which made the backend component of this project straightforward to build.

Now that we’ve seen the key components involved in creating a RAG Question and Answering System with Llama 3.1 and Marqo, we can start deploying locally to test it out!

First, clone the repository to a suitable local directory:

Then change directory:

Now we can begin deployment!

Navigate to your terminal and input the following:

Here, we install the necessary Node.js packages for the frontend project and then start the development server, which will be available at http://localhost:3000. If you are installing npm for the first time, consult the npm documentation.

When you navigate to http://localhost:3000, your browser should look the same as the image and video above, just without any text entered. Keep the terminal open to track any updates on your local deployment. Now, let’s get the backend working!

As mentioned above, to run this project locally you will need to obtain the appropriate models. I recommend downloading them from lmstudio-community/Meta-Llama-3.1-8B-Instruct-GGUF on the Hugging Face Hub.

There are several models you can download from there. I recommend starting with Meta-Llama-3.1-8B-Instruct-Q4_K_M.gguf, as seen in the image below. Note that there is also a “take2” version of this file; feel free to use either.

Download this model and place it in a new directory, backend/models/8B/, inside the marqo-llama3_1 directory (you will need to create this directory).
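If the backend later fails to load the model, a misplaced file is a common cause. A small sanity check like the one below can confirm that the file is where this article says to put it. This is only a sketch. `find_model` is a hypothetical helper, and the default filename assumes you downloaded the recommended Q4_K_M file. The filename that ai_chat.py actually expects may differ.

```python
from pathlib import Path

# Hypothetical default: the file recommended above. The filename that
# ai_chat.py actually loads may differ in the repository.
DEFAULT_MODEL = "Meta-Llama-3.1-8B-Instruct-Q4_K_M.gguf"


def find_model(repo_root: Path, filename: str = DEFAULT_MODEL) -> Path:
    """Return the path to a GGUF model under backend/models/8B/, or raise."""
    model_dir = repo_root / "backend" / "models" / "8B"
    candidate = model_dir / filename
    if candidate.is_file():
        return candidate
    # List the .gguf files that are present so a renamed download is easy to spot.
    available = sorted(p.name for p in model_dir.glob("*.gguf")) if model_dir.is_dir() else []
    raise FileNotFoundError(
        f"{candidate} not found; .gguf files present: {available or 'none'}"
    )
```

Running `find_model(Path.cwd())` from inside the marqo-llama3_1 directory either returns the model path or reports which .gguf files it did find, for example a “take2” variant with a slightly different name.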

Now, open a new terminal inside the project and input the following:

This navigates to the backend directory, creates a virtual environment, activates it, and installs the required Python packages listed in the requirements.txt file.

To run this project, you’ll also need to download NLTK (Natural Language Toolkit) data, because the document_processors.py script uses NLTK's sentence tokenization functionality. To do this, start the Python interpreter in your terminal:

Then, import NLTK:

Once you have installed all the dependencies listed above, we can set up Marqo.

As explained earlier, I will be using Marqo, the end-to-end vector search engine, for the RAG component of this project.

Marqo requires Docker. To install Docker, go to the official Docker website. Ensure that Docker has at least 8GB of memory and 50GB of storage. In Docker Desktop, you can do this by clicking the settings icon, then Resources, and setting the memory to 8GB.

Open a new terminal and use Docker to run Marqo:

First, Docker pulls the marqoai/marqo image and sets up a vector store. Next, Marqo artefacts begin downloading. Once everything is set up successfully, you’ll be greeted with a lovely welcome message. This can take a little while, as Marqo downloads everything it needs before it can start searching.
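Because the first start can take a while, it can help to wait for Marqo programmatically before starting the backend. Below is a minimal standard-library sketch. It assumes the container publishes Marqo's default port, 8882, on localhost, and it treats any HTTP 200 from the root URL as "ready". `wait_for_marqo` is a hypothetical helper and is not part of Marqo.

```python
import time
import urllib.request


def wait_for_marqo(
    url: str = "http://localhost:8882",
    timeout_s: float = 600.0,
    interval_s: float = 5.0,
) -> bool:
    """Poll url until it returns HTTP 200; return False if timeout_s elapses first."""
    deadline = time.monotonic() + timeout_s
    while True:
        try:
            with urllib.request.urlopen(url, timeout=interval_s) as resp:
                if resp.status == 200:
                    return True
        except OSError:
            # Connection refused, reset or timed out (URLError and HTTPError
            # are OSError subclasses): the container is probably still starting.
            pass
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval_s)
```

Calling `wait_for_marqo()` before starting the Flask server would block until Marqo answers on port 8882 or ten minutes pass, whichever comes first.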

It's important that you keep this terminal open. This will allow us to see requests to the Marqo index when we add and retrieve information during the RAG process. Note, when the project starts, the Marqo index will be empty until you add information in the 'Add Knowledge' section of the frontend.

Great, now all that's left to do is run the web server!

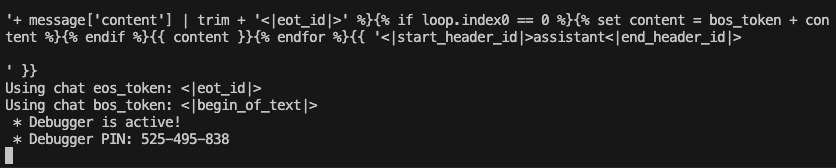

To run the web server, open a new terminal and input:

This starts a Flask development server in debug mode on port 5001 using Python 3. It may take a few moments to start, but you can expect your terminal output to look similar to the following:

You may also see a few other lines of output above this. When you see this in your terminal, navigate to http://localhost:3000 and start asking the chatbot your questions!

Now that you’ve set up this RAG Q&A locally, some of your terminals will begin to display related output as you start entering information in the frontend.

Let’s input “hello” into the chat window and see the response we get:

We receive a lovely hello message back. Let's see how our terminals populated when we made this interaction.

First, the terminal running Marqo shows that it searched for the query in the Marqo knowledge store:

This indicates a successful request to the Marqo index. Nice!

The terminal you used to run python3 -m flask run --debug -p 5001 will look as follows:

We can see that the local server (127.0.0.1) received HTTP requests. The first one is an OPTIONS request to /getKnowledge, and the second one is a POST request to the same endpoint. Both returned a status code of 200, indicating successful requests.
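Why does an OPTIONS request arrive before the POST? The frontend is served from http://localhost:3000, while the Flask API listens on port 5001, so the browser treats them as different origins. For requests that are not CORS "simple requests", such as a POST with a JSON body, the browser first sends an OPTIONS preflight. The function below is a simplified sketch of that rule. It assumes the frontend sends JSON (the request format isn't shown here), it ignores the other request headers that the full Fetch specification considers, and its name is hypothetical.

```python
from typing import Optional

# Methods and Content-Type values that can form a CORS "simple request".
SIMPLE_METHODS = {"GET", "HEAD", "POST"}
SIMPLE_CONTENT_TYPES = {
    "application/x-www-form-urlencoded",
    "multipart/form-data",
    "text/plain",
}


def needs_preflight(
    page_origin: str, api_origin: str, method: str, content_type: Optional[str]
) -> bool:
    """Simplified check for whether a browser sends an OPTIONS preflight first."""
    if page_origin == api_origin:
        return False  # Same-origin requests are not subject to CORS.
    if method.upper() not in SIMPLE_METHODS:
        return True
    if content_type is None:
        return False
    # Compare only the MIME type, dropping parameters such as "; charset=utf-8".
    mime = content_type.split(";")[0].strip().lower()
    return mime not in SIMPLE_CONTENT_TYPES
```

For example, `needs_preflight("http://localhost:3000", "http://localhost:5001", "POST", "application/json")` is `True`, which matches the OPTIONS-then-POST pair in the log above.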

Inside these HTTP requests, we can also see the query, “hello”, and the context from Marqo, which is currently empty because we haven’t populated the Marqo knowledge store with any information yet.

Let’s add some information to the knowledge store and see how our terminal output changes.

If we ask Llama what its name is, we can get some funky answers:

Let’s add some knowledge to the Marqo knowledge store. We’re going to input “your name is marqo” as seen in the image below:

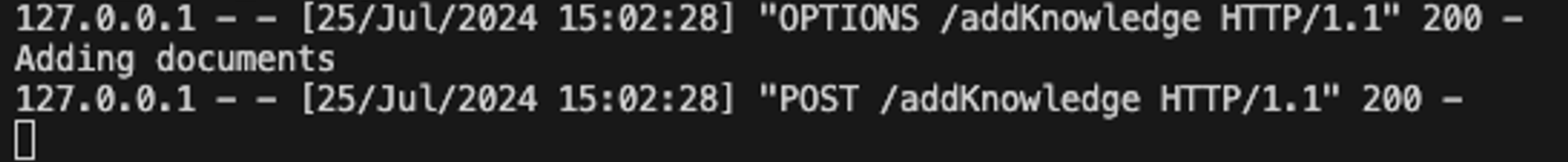

When we submit any knowledge, our terminal will look as follows:

Here, we can see successful /addKnowledge HTTP requests.
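The article doesn't show what happens to submitted knowledge before it reaches Marqo. Because document_processors.py relies on NLTK's sentence tokenizer, one plausible pattern is to split the text into sentence-aligned chunks and index each chunk as a document. The sketch below assumes exactly that. A simple regex stands in for NLTK so that the example runs without downloaded data, and the function name, the 500-character limit, and the "text" field are all hypothetical. The output is a list of dictionaries, which is the general shape that Marqo's Python client accepts in add_documents.

```python
import re
from typing import Dict, List

# Naive sentence boundary: whitespace that follows ".", "!" or "?".
# NLTK's sent_tokenize handles abbreviations and other cases this misses.
_SENTENCE_END = re.compile(r"(?<=[.!?])\s+")


def knowledge_to_documents(text: str, max_chars: int = 500) -> List[Dict[str, str]]:
    """Split submitted knowledge into sentence-aligned chunks, one document per chunk."""
    sentences = [s.strip() for s in _SENTENCE_END.split(text) if s.strip()]
    chunks: List[str] = []
    current = ""
    for sentence in sentences:
        # Close the current chunk if appending this sentence would exceed max_chars.
        # A single sentence longer than max_chars still becomes its own chunk.
        if current and len(current) + 1 + len(sentence) > max_chars:
            chunks.append(current)
            current = sentence
        else:
            current = f"{current} {sentence}" if current else sentence
    if current:
        chunks.append(current)
    # "text" is a hypothetical field name for the content Marqo would embed.
    return [{"text": chunk} for chunk in chunks]
```

A short input such as “your name is marqo” becomes a single document. Chunking only comes into play for longer passages, where smaller documents tend to produce more focused relevance scores.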

When we ask the Q&A bot what its name is, it correctly responds with “marqo” and even gives us the context that we provided!

Let’s see what’s happening on the back-end.

We can see that the Marqo knowledge store is now populated with the information “your name is marqo” and that Marqo generated a score of 0.682 when comparing it against the query. Because our threshold only accepts scores above 0.6, this context is provided to the LLM. Thus, the LLM knows that its name is marqo.

When running this project, feel free to experiment with different settings.

You can change the model in backend/ai_chat.py:

You can also change the score in the function query_for_content in backend/knowledge_store.py:

This queries the Marqo knowledge store and retrieves content based on the provided query. It filters the results to include only those with a relevance score above 0.6 and returns the specified content from these results, limited to a maximum number of results as specified by the limit parameter. Feel free to change this score depending on your relevance needs.
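The filtering step described above can be sketched as a standalone function that operates on a search response. This sketch assumes Marqo's response format, a dict whose "hits" list holds documents with a "_score" key. The names `filter_hits` and `content_var` and the "text" field are assumptions, so the repo's query_for_content may be structured differently. The example reuses the article's 0.682 score alongside a hypothetical low-scoring hit.

```python
from typing import Any, Dict, List


def filter_hits(
    results: Dict[str, Any],
    content_var: str,
    limit: int,
    min_score: float = 0.6,
) -> List[str]:
    """Return the content of hits scoring above min_score, best first, capped at limit."""
    relevant = [hit for hit in results.get("hits", []) if hit.get("_score", 0.0) > min_score]
    # Marqo normally returns hits best-first already; sorting keeps this robust.
    relevant.sort(key=lambda hit: hit["_score"], reverse=True)
    return [hit[content_var] for hit in relevant[:limit]]


if __name__ == "__main__":
    response = {"hits": [
        {"text": "your name is marqo", "_score": 0.682},  # score from the article
        {"text": "an unrelated note", "_score": 0.41},    # hypothetical hit
    ]}
    print(filter_hits(response, content_var="text", limit=5))  # prints ['your name is marqo']
```

Raising `min_score` trades recall for precision. At 0.7, for instance, the “your name is marqo” hit above would be dropped, and the LLM would fall back to its own (funky) answer.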

This project is a very simple Knowledge Q&A with Llama 3.1 8B GGUF and Marqo. It is intended to provide a base for your own further development. Such further work may include:

We will be working on some of these features in the future. To be the first notified when these features go live, sign up to our newsletter or star our repository.

If you have any questions, reach out on the Community Group.